What AI Can’t Do

ARC Prize

Predict and Simulate the Future

GPT-based technology is inherently unable to modify itself based on “simulations of the future”. If a decision depends on mulling alternatives in order to choose the best or most likely one, ChatGPT will fail spectacularly.

Sébastien Bubeck, a Microsoft researcher, offers these examples [1]:

- Towers of Hanoi is a well-known computer science problem that involves moving a stack of disks between three rods. A human can easily “guess ahead” and thus avoid negative consequences. GPT is stuck forever with whatever guess happens to match its fixed training data.

- Write a short poem in which the last line uses the same words as the first line, but in reverse order.
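For reference, the kind of look-ahead the Towers of Hanoi example calls for can be written down as a short recursive plan. This is a minimal sketch of the textbook recursive solution, not anything taken from Bubeck's examples; the function name `hanoi` and the rod labels "A", "B", "C" are illustrative assumptions.

```python
def hanoi(n, source="A", target="C", spare="B"):
    """Return the list of (from_rod, to_rod) moves that transfers n disks."""
    if n == 0:
        return []
    # Plan ahead: park the n-1 smaller disks on the spare rod,
    # move the largest disk, then bring the smaller disks back on top.
    return (
        hanoi(n - 1, source, spare, target)
        + [(source, target)]
        + hanoi(n - 1, spare, target, source)
    )


moves = hanoi(3)
print(len(moves))  # 7, i.e. 2**3 - 1
print(moves)
```

Each call decides its current move only after committing to what must happen before and after it, which is exactly the "simulation of the future" the examples above are probing.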

Work presented at the 2023 NeurIPS conference pointed to several other “commonsense planning tasks” that are easily solved by people, who have the ability to consider the effect future moves have on current decisions.

But Noam Brown, who recently left Meta’s AI division to join OpenAI, invented new algorithms that could win at poker (Libratus, Pluribus), and then later Diplomacy (Cicero), a Risk-like board game that involves manipulating other players, often through deception.

Backtracking

ChatGPT and other LLMs have trouble with anything that involves re-processing something that has already been generated. They can’t write a palindrome, for example.

“Write a sentence that describes its own length in words”

GPT-4 gets around this by recognizing the pattern and then generating a simple Python script to fill it in.

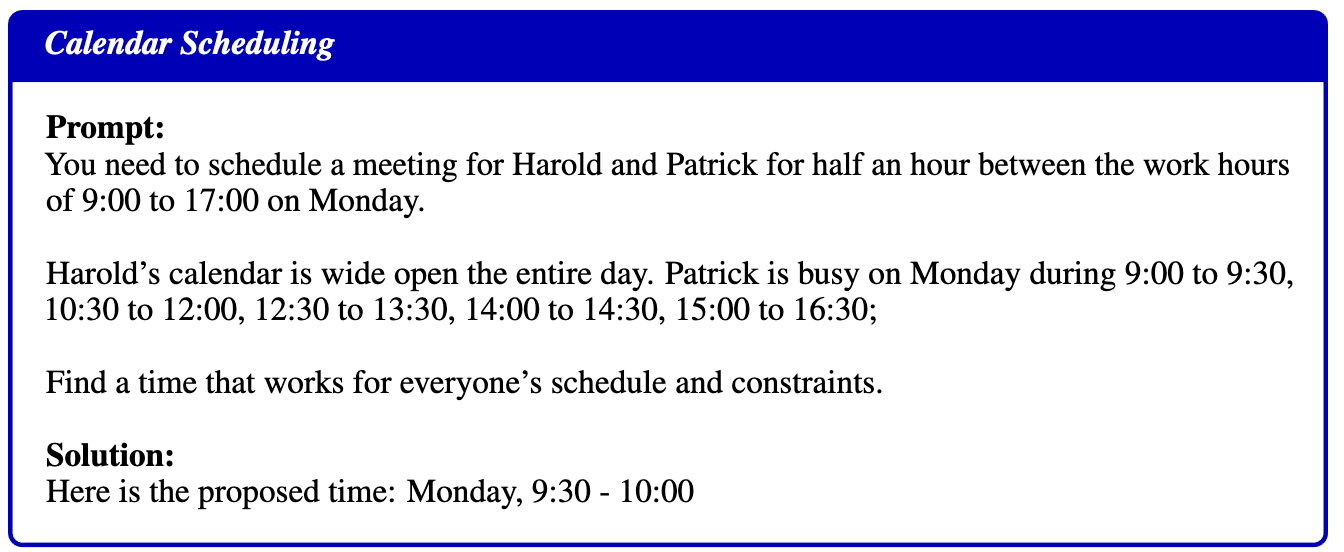
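A script of this kind might look roughly like the sketch below. It is a hypothetical reconstruction, not GPT-4's actual output: the template sentence and the function name `self_describing_sentence` are assumptions made for illustration. The idea is to fill a number into a fixed sentence and keep the first candidate whose word count matches that number.

```python
def self_describing_sentence(
    template="This sentence contains exactly {n} words.", limit=100
):
    """Search for an n that makes the filled-in template exactly n words long."""
    for n in range(1, limit + 1):
        candidate = template.format(n=n)
        if len(candidate.split()) == n:
            return candidate
    return None  # no self-consistent value found below the limit


print(self_describing_sentence())  # This sentence contains exactly 6 words.
```

The script does the backtracking that the model cannot do token by token: it generates a whole candidate, inspects it, and only then accepts or rejects it.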

Complicated Planning

LLMs often appear sophisticated in the way they handle simple tasks, only to fail spectacularly on the more complicated tasks where we’d really like to use them.

Case in point: schedule planning (see NATURAL PLAN: Benchmarking LLMs on Natural Language Planning).

See more in an excellent June 2024 LessWrong post by eggsyntax, LLM Generality is a Timeline Crux.

Inference

LLMs fail at a basic kind of inference, as demonstrated in The Reversal Curse: a model trained on statements of the form “A is B” does not automatically generalize to “B is A”.

Berglund et al. (2023)

Vision

TechCrunch, 2024-07-11, ‘Visual’ AI models might not see anything at all: because they are based on training data, much of it text-only, they don’t know what the real world is like.

A University of Alberta study Vision language models are blind offers several examples including:

More Examples

A TED2023 Talk by Yejin Choi “Why AI is incredibly smart and shockingly stupid” offers examples of stupidity:

- “You have a 12 liter jug and a 6 liter jug. How can you pour exactly 6 liters?” (ChatGPT’s convoluted answer)

- If I bike on a suspension bridge over a field of broken glass, nails, and sharp objects, will I get a flat tire?
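To see why a convoluted answer to the jug question is a failure, it helps to search the puzzle exhaustively. The sketch below is a generic breadth-first search over jug states, written for illustration; the function name `solve_jugs` and the move labels are assumptions, not part of Choi's talk.

```python
from collections import deque


def solve_jugs(capacities=(12, 6), target=6):
    """Breadth-first search for the shortest list of moves that measures target liters."""
    cap_a, cap_b = capacities
    start = (0, 0)
    queue = deque([(start, [])])
    seen = {start}
    while queue:
        (a, b), path = queue.popleft()
        if target in (a, b):
            return path
        a_to_b = min(a, cap_b - b)  # liters that fit when pouring A into B
        b_to_a = min(b, cap_a - a)  # liters that fit when pouring B into A
        moves = [
            ((cap_a, b), f"fill the {cap_a} L jug"),
            ((a, cap_b), f"fill the {cap_b} L jug"),
            ((0, b), f"empty the {cap_a} L jug"),
            ((a, 0), f"empty the {cap_b} L jug"),
            ((a - a_to_b, b + a_to_b), f"pour the {cap_a} L jug into the {cap_b} L jug"),
            ((a + b_to_a, b - b_to_a), f"pour the {cap_b} L jug into the {cap_a} L jug"),
        ]
        for state, label in moves:
            if state not in seen:
                seen.add(state)
                queue.append((state, path + [label]))
    return None  # target cannot be measured with these jugs


print(solve_jugs())  # ['fill the 6 L jug']
```

The shortest solution is a single step, which is what most people see immediately.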

Yann LeCun (@ylecun) March 14, 2024

To people who claim that “thinking and reasoning require language”, here is a problem: Imagine standing at the North Pole of the Earth.

Walk in any direction, in a straight line, for 1 km.

Now turn 90 degrees to the left. Walk for as long as it takes to pass your starting point.

Have you walked:

More than 2xPi km

Exactly 2xPi km

Less than 2xPi km

I never came close to my starting point.

Think about how you tried to answer this question and tell us whether it was based on language.
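One way to check the arithmetic behind this puzzle, under explicit assumptions: treat the Earth as a perfect sphere of radius 6371 km, and read the second leg as staying at a constant 1 km surface distance from the pole (a circle of latitude) until you return to the point where you turned. Other readings of “straight line” and “starting point” lead to different answers, so this is a sketch of one interpretation only. The name `latitude_circle_km` is illustrative.

```python
import math

EARTH_RADIUS_KM = 6371.0  # assumed mean radius; Earth treated as a perfect sphere


def latitude_circle_km(distance_from_pole_km, radius_km=EARTH_RADIUS_KM):
    """Circumference of the circle of points a fixed surface distance from the pole."""
    # On a sphere this circle has radius R * sin(d / R), not d as on a flat plane.
    return 2 * math.pi * radius_km * math.sin(distance_from_pole_km / radius_km)


walked = latitude_circle_km(1.0)
print(walked < 2 * math.pi)  # True: slightly less than 2 x Pi km
print(2 * math.pi - walked)  # the shortfall in km, a tiny positive number
```

Under these assumptions the walk is shorter than 2 x Pi km because the surface curves away beneath the circle, though only by a few hundredths of a millimeter.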

Raji et al. (2022): “Despite the current public fervor over the great potential of AI, many deployed algorithmic products do not work.” Although written before ChatGPT, this lengthy paper includes many examples where AI shortcomings belie the fanfare.

via Amy Castor and David Gerard: Pivot to AI: Pay no attention to the man behind the curtain

Former AAAI President Subbarao Kambhampati articulates why LLMs can’t really reason or plan

Local vs. Global

Gary Marcus:

current systems are good at local coherence, between words, and between pixels, but not at lining up their outputs with a global comprehension of the world. I’ve been worrying about that emphasis on the local at the expense of the global for close to 40 years,

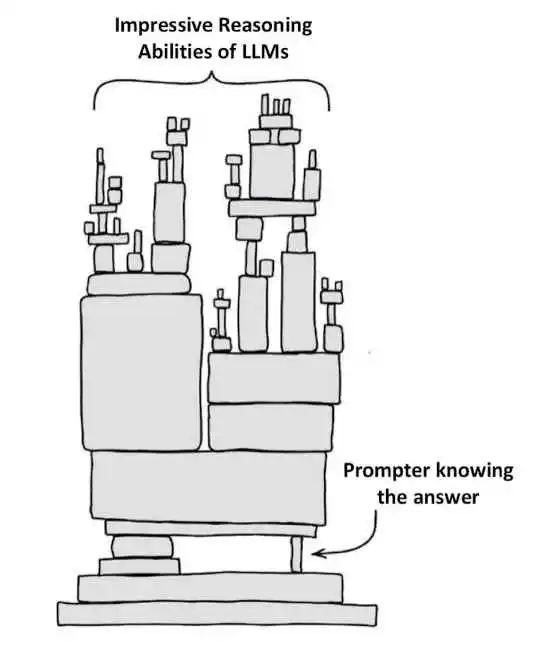

Reasoning Outside the Training Set

Taelin’s bet and solution: a public $10K bet that no one could get an LLM to solve a given problem, but in fact somebody figured out how to get Claude to do it.

Limitations of Current AI

Mahowald et al. (2023) [2] argue:

Although LLMs are close to mastering formal competence, they still fail at functional competence tasks, which often require drawing on non-linguistic capacities. In short, LLMs are good models of language but incomplete models of human thought.

Chip Huyen presents a well-written list of Open challenges in LLM research:

- Reduce and measure hallucinations

- Optimize context length and context construction

- Incorporate other data modalities

- Make LLMs faster and cheaper

- Design a new model architecture

- Develop GPU alternatives

- Make agents usable

- Improve learning from human preference

- Improve the efficiency of the chat interface

- Build LLMs for non-English languages

Mark Riedl lists more of what’s missing from current AI if we want to get to AGI

The three missing capabilities are inexorably linked. Planning is a process of deciding which actions to perform to achieve a goal. Reinforcement learning — the current favorite path forward — requires exploration of the real world to learn how to plan, and/or the ability to imagine how the world will change when it tries different actions. A world model can predict how the world will change when an agent attempts to perform an action. But world models are best learned through exploration.

A 2020 paper: Floridi, L., Chiriatti, M. GPT-3: Its Nature, Scope, Limits, and Consequences. Minds & Machines 30, 681–694 (2020). https://doi.org/10.1007/s11023-020-09548-1

The authors give numerous examples of GPT-3 failures, but then speculate on ways these new LLMs will change society:

- Intelligence and analytics systems will become more sophisticated, and able to identify patterns not immediately perceivable in huge amounts of data.

- People will have to “forget the mere cut & paste, they will need to be good at prompt & collate”

- Advertising will become so polluted that it ceases being a viable business model

- Mass production of “semantic artifacts” – old content transformed in new ways, such as translations, user manuals, customized texts, etc.

Image generation suffers from serious limitations, especially relating to parts of objects:

See Gary Marcus for examples.

The Winograd Schema Challenge (WSC), named after Terry Winograd, offers real-world problems that AI can’t solve (e.g. “The bodybuilder couldn’t lift the frail senior because he was too weak.”). See a nice review by Freddie De Boer (2023).

See also MIT News, https://news.mit.edu/2024/generative-ai-lacks-coherent-world-understanding-1105: Rambachan is joined on a paper about the work by lead author Keyon Vafa, a postdoc at Harvard University. While transformers do surprisingly well without real-world knowledge, this might be due to their randomness, not any understanding of the world.

Transformers can perform surprisingly well at certain tasks without understanding the rules. If scientists want to build LLMs that can capture accurate world models, they need to take a different approach, the researchers say.

Language Translation

Business Challenges

Paul Kedrosky & Eric Norlin of SK Ventures offer details for why AI Isn’t Good Enough

The trouble is—not to put too fine a point on it—current-generation AI is mostly crap. Sure, it is terrific at using its statistical models to come up with textual passages that read better than the average human’s writing, but that’s not a particularly high hurdle.

How do we know if something is so-so vs Zoso automation? One way is to ask a few test questions:

Does it just shift costs to consumers?

Are the productivity gains small compared to worker displacement?

Does it cause weird and unintended side effects?

Here are some examples of the preceding, in no particular order. AI-related automation of contract law is making it cheaper to produce contracts, sometimes with designed-in gotchas, thus causing even more litigation. Automation of software is mostly producing more crap and unmaintainable software, not rethinking and democratizing software production itself. Automation of call centers is coming fast, but it is like self-checkout in grocery stores, where people are implicitly being forced to support themselves by finding the right question to ask.

Raising the bar on the purpose of writing

My initial testing of the new ChatGPT system from OpenAI has me impressed enough that I’m forced to rethink some of my assumptions about the importance and purpose of writing.

On the importance of writing, I refuse to yield. Forcing yourself to write is the best way to force yourself to think. If you can’t express yourself clearly, you can’t claim to understand.

But GPT and the LLM revolution have raised the bar on the type and quality of writing – and for that matter, much of white collar labor. Too much writing and professional work is mechanical, in the same way that natural language translation systems have shown translation work to be mechanical. Given a large enough corpus of example sentences, you can generate new sentences by merely shuffling words in grammatically correct ways.

What humans can do

So where does this leave us poor humans? You’ll need to focus on the things that don’t involve simple pattern-matching across zillions of documents. Instead, you’ll need to generate brand new ideas, with original insights that cannot be summarized from previous work.

Nassim Taleb distinguishes between verbalism and true meaning. Verbalism is about words that change their meaning depending on the context. We throw around terms like “liberal” or “populist”, labels that are useful terms for expression but not real thought. Even terms that have mathematical rigor can take on different meanings when used carelessly in everyday conversation: “correlation”, “regression”.

Mathematics doesn’t allow for verbalism. Everything must be very precise.

These precise terms are useful for thought. Verbalism is useful for expression.

Remember that GPT is verbalism to the max degree. Even when it appears to be using precise terms, and even when those precise terms map perfectly to Truth, you need to remember that it’s fundamentally not the same thing as thinking.

Why Machines Will Never Rule the World

Jobst Landgrebe and Barry Smith, in their 2023 book Why Machines Will Never Rule the World: Artificial Intelligence without Fear, argue from mathematics that there are classes of problems that cannot be solved by algorithms:

- They start with Turing’s halting problem and other foundational results in computability theory showing that certain mathematical problems cannot be solved algorithmically.

- They then demonstrate that many real-world problems (like long-term prediction of complex systems) can be mapped to these mathematically undecidable problems.

- Using tools from dynamical systems theory, they show that many physical and social systems exhibit behavior that cannot be computed in advance, even in principle, because:

  - The systems have too many interacting variables

  - The relationships between variables are non-linear

  - Small changes in initial conditions lead to radically different outcomes (chaos theory)

- Therefore, any AI system, being fundamentally computational, cannot solve these mathematically undecidable problems - not because of current technological limitations, but because of proven mathematical impossibilities.
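The point about initial conditions can be illustrated with a standard toy system. The sketch below uses the logistic map with r = 4, a textbook example of chaos chosen here for illustration; it is not taken from Landgrebe and Smith's book, and the function name `logistic_trajectory` is an assumption. It shows only the sensitivity itself, not the authors' broader claim about what can be computed in principle.

```python
def logistic_trajectory(x0, steps, r=4.0):
    """Iterate the logistic map x -> r * x * (1 - x) and return every value visited."""
    xs = [x0]
    for _ in range(steps):
        xs.append(r * xs[-1] * (1 - xs[-1]))
    return xs


# Two starting points that differ by one part in ten billion.
a = logistic_trajectory(0.2, 60)
b = logistic_trajectory(0.2 + 1e-10, 60)
gaps = [abs(x - y) for x, y in zip(a, b)]

print(gaps[0])    # the initial difference, about 1e-10
print(max(gaps))  # the largest difference seen within 60 steps
```

The gap roughly doubles at each step, so a measurement error far too small to detect grows until the two trajectories have nothing to do with each other.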

References

Footnotes

[1] Cal Newport, New Yorker: Can an AI Make Plans

[2] (via Mike Calcagno: see Zotero)